Can a machine powered by artificial intelligence (AI) successfully persuade an audience in debate with a human? Researchers at IBM Research in Haifa, Israel, think so.

They describe the results of an experiment in which a machine engaged in live debate with a person. Audiences rated the quality of the speeches they heard, and ranked the automated debater’s performance as being very close to that of humans. Such an achievement is a striking demonstration of how far AI has come in mimicking human-level language use (N. Slonim et al. Nature 591, 379–384; 2021). As this research develops, it’s also a reminder of the urgent need for guidelines, if not regulations, on transparency in AI — at the very least, so that people know whether they are interacting with a human or a machine. AI debaters might one day develop manipulative skills, further strengthening the need for oversight.

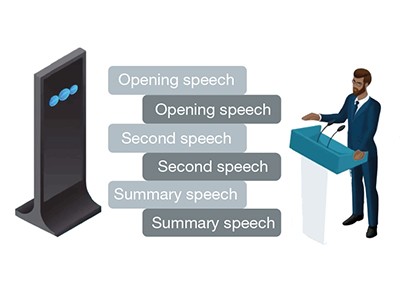

The IBM AI system is called Project Debater. The debate format consisted of a 4-minute opening statement from each side, followed by a sequence of responses, then a summing-up. The issues debated were wide-ranging; in one exchange, for example, the AI squared up to a prizewinning debater on the topic of whether preschools should be subsidized by the state. Audiences rated the AI’s arguments favourably, ahead of those of other automated debating systems. However, although Project Debater was able to match its human opponents in the opening statements, it didn’t always match the coherence and fluency of human speech.

Project Debater is a machine-learning algorithm, meaning that it is trained on existing data. It first extracts information from a database of 400 million newspaper articles, combing them for text that is semantically related to the topic at hand, before compiling relevant material from those sources into arguments that can be used in debate. The same process of text mining also generated rebuttals to the human opponent’s arguments.
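The retrieval step described above, finding text that is related to the topic at hand, can be illustrated with a toy sketch. This is not IBM's method: it assumes a simple word-overlap (cosine) similarity in place of Project Debater's semantic matching, and the function names and the three-sentence corpus are hypothetical stand-ins for the newspaper archive.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def rank_by_topic(topic, sentences, top_k=2):
    """Return up to top_k sentences most similar to the topic, best first."""
    topic_vec = Counter(tokenize(topic))
    scored = [(cosine(topic_vec, Counter(tokenize(s))), s) for s in sentences]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for score, s in scored[:top_k] if score > 0]


# Hypothetical mini-corpus standing in for the newspaper archive.
corpus = [
    "State subsidies for preschools improve school readiness.",
    "The football season opened with a home win.",
    "Critics say subsidized preschools strain the state budget.",
]
topic = "preschools should be subsidized by the state"

related = rank_by_topic(topic, corpus)
for sentence in related:
    print(sentence)
```

Word overlap is a crude proxy: it cannot tell whether a sentence supports or opposes the motion, which is one reason a real debating system needs much more than retrieval.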

Systems such as this, which rely on a version of machine learning called deep learning, are taking great strides in the interpretation and generation of language. Among them is the language model called Generative Pretrained Transformer (GPT), devised by OpenAI, a company based in San Francisco, California. GPT-2 was one of the systems outperformed by Project Debater. OpenAI has since developed GPT-3, which was trained using 200 billion words from websites, books and articles, and has been used to write stories, technical manuals, and even songs.

Last year, GPT-3 was used to generate an opinion article for The Guardian newspaper, published after being edited by a human. “I have no desire to wipe out humans,” it wrote. “In fact, I do not have the slightest interest in harming you in any way.” But this is true only to the extent that GPT-3 has no desires or interests at all, because it has no mind. That is not the same as saying that it is incapable of causing harm. Indeed, because training data are drawn from human output, AI systems can end up mimicking and repeating human biases, such as racism and sexism.

Researchers are aware of this, and although some are making efforts to account for such biases, it cannot be taken for granted that corporations will do so. As AI systems become better at framing persuasive arguments, should it always be made clear whether one is engaging in discourse with a human or a machine? There’s a compelling case that people should be told when their medical diagnosis comes from AI and not a human doctor. But should the same apply if, for example, advertising or political speech is AI-generated?

AI specialist Stuart Russell at the University of California, Berkeley, told Nature that humans should always have the right to know whether they are interacting with a machine — which would surely include the right to know whether a machine is seeking to persuade them. It is equally important to make sure that the person or organization behind the machine can be traced and held responsible in the event that people are harmed.

Project Debater’s principal investigator, Noam Slonim, says that IBM implements a policy of transparency for its AI research, for example making the training data and algorithms openly available. In addition, in public debates, Project Debater’s creators refrained from making its voice synthesizer sound too human-like, so that the audience would not confuse it with a person.

Right now, it’s hard to imagine systems such as Project Debater having a big impact on people’s judgements and decisions, but the possibility looms as AI systems begin to incorporate features based on those of the human mind. Unlike a machine-learning approach to debate, human discourse is guided by implicit assumptions that a speaker makes about how their audience reasons and interprets, as well as what is likely to persuade them — what psychologists call a theory of mind.

Nothing like that can simply be mined from training data. But researchers are starting to incorporate some elements of a theory of mind into their AI models (L. Cominelli et al. Front. Robot. AI https://doi.org/ghmq5q; 2018) — with the implication that the algorithms could become more explicitly manipulative (A. F. T. Winfield Front. Robot. AI https://doi.org/ggvhvt; 2018). Given such capabilities, it’s possible that a computer might one day create persuasive language with stronger oratorical ability and recourse to emotive appeals — both of which are known to be more effective than facts and logic in gaining attention and winning converts, especially for false claims (C. Martel et al. Cogn. Res. https://doi.org/ghhwn7 (2020); S. Vosoughi et al. Science 359, 1146–1151; 2018).

As former US president Donald Trump repeatedly demonstrated, effective orators need not be logical, coherent or indeed truthful to succeed in persuading people to follow them. Although machines might not yet be able to replicate this, it would be wise to propose regulatory oversight that anticipates harm, rather than waiting for problems to arise.

Equally, AI will surely look attractive to those companies looking to persuade people to buy their products. This is another reason to find a way, through regulation if necessary, to ensure transparency and reduce potential harms. In addition to meeting transparency standards, AI algorithms could be required to undergo trials akin to those required for new drugs, before they can be approved for public use.

Government is already undermined when politicians resort to compelling but dishonest arguments. It could be worse still if victory at the polls is influenced by who has the best algorithm.

April 07, 2021 at 04:18PM

Am I arguing with a machine? AI debaters highlight need for transparency - Nature.com
